Tuesday, 25 October

Fast rates for prediction with costly or limited expert advice (Gilles Blanchard - University Paris-Saclay)

Abstract:

Given a finite family of experts or predictors for forecasting a target variable of interest, we consider the classical problem of minimizing the excess error with respect to the best expert. We will first review classical results under full information in the stochastic setting (observations and fully observed predictions are assumed random and independent across time), and when the goal concerns prediction of a single new instance. It is known in this case that under some assumptions on the prediction loss, it is possible to get "fast" learning rates (in expectation and with high probability) for the excess prediction risk, namely of order 1/N where N is the number of observations (training rounds). We will study the possibility of fast rates under the additional constraint that the number of experts consulted each round is limited, or that there is a limited total "expert query budget" for the learning phase. We show that if we are allowed to see the advice of only one expert per round for N rounds in the training phase, or to use the advice of only one expert for prediction in the test phase, the worst-case excess risk in the prediction phase must have a "slow rate" (1/sqrt(N)) at best. However, it is sufficient that we are allowed to observe at least two actively chosen experts per training round and use at least two experts for prediction, for the "fast rate" of order 1/N to be achievable. Given time, we will also discuss a similar question in the setting of individual sequence prediction, the goal then being to minimize cumulative excess risk (regret).

Bio: Gilles Blanchard obtained his Ph.D. in 2001 and subsequently held positions as a researcher at the French CNRS, at the Fraunhofer Institute and the Weierstrass Institute in Berlin, then as a professor (chair of statistics) at the Institute of Mathematics of the University of Potsdam (2010-2018). Since 2018 he has been a professor at the Institute of Mathematics of the University Paris-Saclay. He works at the crossroads of statistics and machine learning, and has in particular made contributions on the themes of decision trees, kernel methods, dimensionality reduction, multiple testing, label contamination models, model selection, and more recently learning methods under efficiency or frugality constraints.

Learning Dynamical Systems via Koopman Operator Regression in Reproducing Kernel Hilbert Spaces (Vladimir R. Kostic - Italian Institute of Technology)

Abstract:

In the last couple of years the Koopman operator framework has attracted a lot of attention due to the growing use of data-driven dynamical systems in science and engineering. Relevant results span the fields of functional analysis, numerical algorithms, as well as machine and, in particular, deep learning. In this talk we will focus on discrete dynamical systems modelled by Markov chains and their study through the corresponding Koopman operator. While many data-driven algorithms to reconstruct such operators are well known, their relationship with statistical learning is largely unexplored. Hence, our main goal in this talk is to formalise a framework to learn the Koopman operator from a finite data sample from the dynamical system. We will limit ourselves to the important case when the Markov chain admits an invariant distribution and, relying on the theory of reproducing kernel Hilbert spaces (RKHS), introduce a notion of risk from which different estimators naturally arise. Special attention will be given to the estimation of the Koopman spectral properties, and in particular its modal decomposition (KMD), which is of paramount importance in practice. With this framework we will analyse, theoretically and empirically, some existing estimators, e.g. (extended) dynamic mode decomposition (DMD), but also introduce a novel reduced-rank operator regression (RRR) estimator. We will further present learning bounds for the proposed estimator, holding for data drawn i.i.d. from the invariant distribution, as well as for non-i.i.d. data gathered from the trajectories of the dynamical system, the latter in terms of mixing coefficients. On the empirical side, we will validate the derived bounds and see that RRR may be beneficial over other widely used estimators. Finally, we will showcase several possible applications of the estimated KMD beyond forecasting.

Bio: Vladimir R. Kostic is a researcher in the Computational Statistics and Machine Learning group at Istituto Italiano di Tecnologia, Genova, Italy. He received his PhD in Numerical Mathematics in 2010 at the University of Novi Sad, Serbia, where he is also an associate professor. His scientific contributions span the fields of Numerical Linear Algebra, Optimization, and Machine Learning, and include applications in engineering, environmental sciences and ecology. His current interests are in the areas of computational optimal transport and learning dynamical systems.

Online Learning and Potential Functions (Yoav Freund - UCSD)

Abstract:

Potential functions have long been used as a tool in the design and analysis of online learning algorithms. However, choosing the best potential function requires a-priori assumptions. In this talk we show a one-to-one relationship between potential functions and simultaneous bounds on the regret. Using this relationship we design a parameter-free optimal online learning algorithm for an extended version of decision-theoretic online learning. The continuous-time limit of this algorithm yields a version of online learning that can be applied to Itô processes.

Bio: Yoav Freund is a professor of Computer Science and Engineering at UC San Diego. His work is in the area of machine learning, computational statistics and their applications. Dr. Freund is an internationally known researcher in the field of machine learning, a field which bridges computer science and statistics. He is best known for his joint work with Dr. Robert Schapire on the AdaBoost algorithm. For this work they were awarded the 2003 Gödel Prize in Theoretical Computer Science, as well as the Kanellakis Prize in 2004.

A Theory of Weak-Supervision and Zero-Shot Learning (Eli Upfal - Brown University)

Abstract:

Labeled training data is often scarce, unavailable, or very costly to obtain. To circumvent this problem, there is a growing interest in developing methods that can exploit sources of information other than labeled data, such as weak supervision and zero-shot learning. While these techniques have obtained impressive accuracy in practice, both for vision and language domains, they come with no theoretical characterization of their accuracy. In a sequence of recent works, we develop a rigorous mathematical framework for constructing and analyzing algorithms that combine multiple sources of related data to solve a new learning task. Our learning algorithms provably converge to models that have minimum empirical risk with respect to an adversarial choice over feasible labelings for a set of unlabeled data, where the feasibility of a labeling is computed through constraints defined by rigorously estimated statistics of the sources. Notably, these methods do not require the related sources to have the same labeling space as the multiclass classification task. We demonstrate the effectiveness of our approach with experiments on various image classification tasks.

Bio: Eli Upfal is the Rush C. Hawkins University Professor of Computer Science at Brown University. He joined Brown in 1998, and was the CS department chair from 2002 to 2007. Professor Upfal's research focuses on statistical data analysis and the design and analysis of algorithms, in particular randomized algorithms and probabilistic analysis of algorithms, with applications ranging from statistical learning theory to computational biology and computational finance. Professor Upfal is a fellow of the IEEE and the Association for Computing Machinery (ACM). His work at Brown has been funded in part by the National Science Foundation (NSF), the Defense Advanced Research Projects Agency (DARPA), the Office of Naval Research (ONR), the National Institutes of Health (NIH), IBM, Yahoo!, and Two Sigma. Upfal co-authored a popular textbook, "Probability and Computing: Randomized Algorithms and Probabilistic Analysis" (with M. Mitzenmacher), Cambridge University Press 2005, 2018, 2007 (Chinese), 2008 (Japanese), and 2009 (Polish).

The Role of Convexity in Data-Driven Decision-Making (Peyman Mohajerin - Delft University of Technology)

Abstract:

In this talk, we study a general class of data-driven decision-making problems and discuss different terminologies and research questions that emerge in this context. As a decision mechanism (the mapping from data to decisions), we introduce a broad class of data-driven optimization known as distributionally robust optimization. We then highlight three different aspects of these problems, namely computation, statistics, and real-time implementation, and elaborate on how convexity can help in each of them. A particular focus will be given to real-time implementation, which is closely connected to the topic of Online Optimization.

Bio: Peyman Mohajerin Esfahani is an associate professor at the Delft Center for Systems and Control. He joined TU Delft in October 2016 as an assistant professor. Prior to that, he held several research appointments at EPFL, ETH Zurich, and MIT between 2014 and 2016. He received the BSc and MSc degrees from Sharif University of Technology, Iran, and the PhD degree from ETH Zurich. He currently serves as an associate editor of Operations Research, Transactions on Automatic Control, and Open Journal of Mathematical Optimization. He was one of the three finalists for the Young Researcher Prize in Continuous Optimization awarded by the Mathematical Optimization Society in 2016, and a recipient of the 2016 George S. Axelby Outstanding Paper Award from the IEEE Control Systems Society. He received the ERC Starting Grant and the INFORMS Frederick W. Lanchester Prize in 2020. He is the recipient of the 2022 European Control Award.

Wednesday, 26 October

On how PAC-Bayesian Bounds Help to Better Understand (and Improve) Bayesian Machine Learning (Andrés Masegosa - Aalborg University)

Abstract:

Bayesian statistics were quickly adopted by the machine learning community from the very beginning of the area to design novel learning algorithms. The Bayesian approach in machine learning usually starts with the specification of a probabilistic model class, aiming to describe the data generating process, and a Bayesian prior, aiming to reflect our subjective or prior knowledge about the data generating process. In this context, learning reduces to computing the Bayesian posterior, which is then used to make predictions through Bayesian model averaging. In this talk, we will discuss how PAC-Bayesian bounds can help us to better understand the Bayesian approach to machine learning, as they provide a generalization perspective on Bayesian methods. For example, we will show in simple and intuitive terms that Bayesian model averaging provides suboptimal generalization performance when the model class is misspecified. We will also discuss, with the help of PAC-Bayesian bounds, why Bayesian methods need regularizing priors, which usually take the form of zero-centered Gaussian distributions penalizing large-norm parameters, to improve generalization performance, and how PAC-Bayesian bounds provide an excellent guide for designing optimal priors in terms of generalization performance.

Bio: Andrés R. Masegosa is Associate Professor of Computer Science at Aalborg University in Copenhagen, Denmark. He has a PhD in Computer Science from the University of Granada (2009). Previously, he was assistant professor at the University of Almería (Spain) and he has been a visiting researcher at the Technical University of Berlin (2017, 2018) and the University of Copenhagen (2019). His research interests are in the broad area of Probabilistic Machine Learning, more specifically in the application of Bayesian statistics to develop machines that are able to learn from previous experience. He has published more than sixty papers in international journals and conferences, many of them in top venues like NeurIPS, ICML, UAI, SIGIR, IEEE-CIM, IEEE-TSMC-Part B, Neurocomputing, etc. He has been PI or co-PI of several research projects in the context of machine learning. In 2017, he was awarded a prestigious early-stage researcher grant by the Spanish government. He serves on the Program Committees of several top conferences in machine learning. Web: https://andresmasegosa.github.io/

Convergence of Nearest Neighbour Classification (Sanjoy Dasgupta - UCSD)

Abstract:

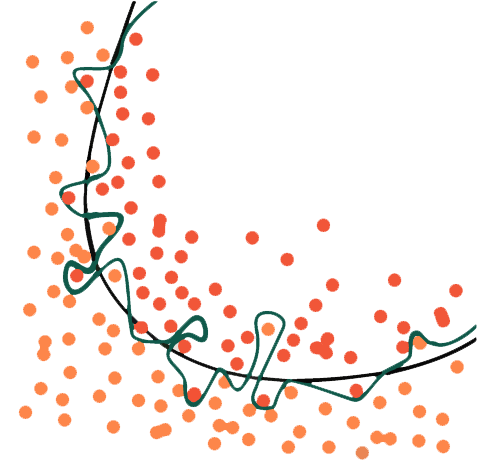

The "nearest neighbor (NN) classifier" labels a new data instance by taking a majority vote over the k most similar instances seen in the past. With an appropriate setting of k, it is capable of modeling arbitrary decision rules. Traditional convergence analysis for nearest neighbor, as well as other nonparametric estimators, has focused on two questions: (1) universal consistency---that is, convergence (as the number of data points goes to infinity) to the best-possible classifier without any conditions on the data-generating distribution---and (2) rates of convergence that are minimax-optimal, assuming that the data distribution lies within some standard class of smooth functions. We will describe existing results and advance what is known on both these fronts. But we also show how to attain significantly stronger types of results: (3) rates of convergence that are accurate for the specific data distribution, rather than being generic for a smoothness class, and (4) rates that are accurate for the distribution as well as the specific query point. Along the way, we introduce a notion of "margin" for nearest neighbor classification. This is a function m(x) that assigns a positive real number to every point in the input space; the size of the data set needed for NN (with adaptive choice of k) to predict correctly at x is, roughly, 1/m(x). The statistical background needed for understanding these results is minimal, and will be introduced during the talk.

Bio: Sanjoy Dasgupta is a Professor in the Department of Computer Science and Engineering at UC San Diego. He received his B.A. in Computer Science from Harvard in 1993, his PhD from Berkeley in 2000, and spent two years at AT&T Research Labs before joining UCSD. His area of research is algorithmic statistics, with a focus on unsupervised and minimally supervised learning. He is the author of a textbook, Algorithms (with Christos Papadimitriou and Umesh Vazirani), which appeared in 2006.

Majorizing Measures, Codes, and Information (Maxim Raginsky - University of Illinois at Urbana-Champaign)

Abstract:

The majorizing measure theorem of Fernique and Talagrand remains one of the deepest and most fundamental results in the theory of stochastic processes, with important applications in statistical learning theory. It relates the boundedness of stochastic processes indexed by elements of a metric space to complexity measures pertaining to certain multiscale combinatorial structures, such as packing and covering trees. In this talk, building on the ideas first outlined in a little-noticed preprint of Andreas Maurer, I will present an information-theoretic perspective on the majorizing measure theorem, according to which the boundedness of stochastic processes is intimately tied to the existence of efficient codes for the elements of the indexing metric space. I will discuss the implications of this perspective for some key concerns in statistical learning theory, such as generalization guarantees of learning algorithms.

Bio: Maxim Raginsky received the B.S. and M.S. degrees in 2000 and the Ph.D. degree in 2002 from Northwestern University, all in Electrical Engineering. He has held research positions with Northwestern, the University of Illinois at Urbana-Champaign (where he was a Beckman Foundation Fellow from 2004 to 2007), and Duke University. In 2012, he returned to UIUC, where he is currently a Professor and William L. Everitt Fellow with the Department of Electrical and Computer Engineering and the Coordinated Science Laboratory. He also holds a courtesy appointment with the Department of Computer Science. Prof. Raginsky's interests cover probability and stochastic processes, deterministic and stochastic control, machine learning, optimization, and information theory. Much of his recent research is motivated by fundamental questions in modeling, learning, and simulation of nonlinear dynamical systems, with applications to advanced electronics, autonomy, and artificial intelligence. He served as a Program Co-Chair of the 2022 Conference on Learning Theory (COLT).

Beyond Empirical Risk Minimization (Santiago Mazuelas - BCAM)

Abstract:

The empirical risk minimization (ERM) approach for supervised learning chooses prediction rules that fit training samples and are “simple” (generalize). Such an approach has been the workhorse of machine learning methods and has enabled a myriad of applications. However, ERM methods strongly rely on the specific training samples available and cannot easily address scenarios affected by distribution shifts and corrupted samples. Robust risk minimization (RRM) is an alternative approach that does not aim to fit training examples and instead chooses prediction rules minimizing the maximum expected loss (risk). This talk presents a learning framework based on the generalized maximum entropy principle that leads to minimax risk classifiers (MRCs). The proposed MRCs can efficiently minimize worst-case expected 0-1 loss and provide tight performance guarantees. In particular, MRCs are strongly universally consistent using feature mappings given by characteristic kernels. MRC learning is based on expectation estimates and does not strongly rely on specific training samples. Therefore, the methods presented can provide techniques that are robust to practical situations that defy conventional assumptions, e.g., training samples that follow a different distribution or are corrupted by noise.

Bio: Santiago Mazuelas received the Ph.D. in Mathematics and Ph.D. in Telecommunications Engineering from the University of Valladolid, Spain, in 2009 and 2011, respectively. Since 2017 he has been a Ramon y Cajal Researcher at the Basque Center for Applied Mathematics (BCAM). Prior to joining BCAM, he was a Staff Engineer at Qualcomm Corporate Research and Development from 2014 to 2017. He previously worked from 2009 to 2014 as Postdoctoral Fellow and Associate in the Laboratory for Information and Decision Systems (LIDS) at the Massachusetts Institute of Technology (MIT). His general research interest is the application of mathematics to solve practical problems; currently his work is primarily focused on machine learning and statistical signal processing. He received the Young Scientist Prize from the Union Radio-Scientifique Internationale (URSI) Symposium in 2007, and the Early Achievement Award from the IEEE ComSoc in 2018. His papers received the IEEE Communications Society Fred W. Ellersick Prize in 2012, the SEIO-FBBVA Best Applied Contribution in the Statistics Field in 2022, and Best Paper Awards from the IEEE ICC in 2013, the IEEE ICUWB in 2011, and the IEEE Globecom in 2011.

Generalization Bounds via Convex Analysis (Gergely Neu - Universidad Pompeu Fabra)

Abstract:

Since the celebrated works of Russo and Zou (2016,2019) and Xu and Raginsky (2017), it has been well known that the generalization error of supervised learning algorithms can be bounded in terms of the mutual information between their input and the output, given that the loss of any fixed hypothesis has a subgaussian tail. In this work, we generalize this result beyond the standard choice of Shannon's mutual information to measure the dependence between the input and the output. Our main result shows that it is indeed possible to replace the mutual information by any strongly convex function of the joint input-output distribution, with the subgaussianity condition on the losses replaced by a bound on an appropriately chosen norm capturing the geometry of the dependence measure. This allows us to derive a range of generalization bounds that are either entirely new or strengthen previously known ones. Examples include bounds stated in terms of p-norm divergences and the Wasserstein-2 distance, which are respectively applicable for heavy-tailed loss distributions and highly smooth loss functions. Our analysis is entirely based on elementary tools from convex analysis by tracking the growth of a potential function associated with the dependence measure and the loss function.

Bio: Gergely Neu is a research assistant professor at the Pompeu Fabra University, Barcelona, Spain. He has previously worked with the SequeL team of INRIA Lille, France and the RLAI group at the University of Alberta, Edmonton, Canada. He obtained his PhD degree in 2013 from the Budapest University of Technology and Economics, where his advisors were András György, Csaba Szepesvári and László Györfi. His main research interests are in machine learning theory, with a strong focus on sequential decision making problems. Dr. Neu was the recipient of a Google Faculty Research award in 2018, the Bosch Young AI Researcher Award in 2019, and an ERC Starting Grant in 2020.

Thursday, 27 October

Nonparametric Multiple-Output Center-Outward Quantile Regression (Eustasio del Barrio - Universidad de Valladolid)

Abstract:

This talk addresses the problem of nonparametric multiple-output quantile regression based on the novel concept of multivariate center-outward quantiles introduced in Chernozhukov et al. (2017) and Hallin et al. (2021). To obtain conditional quantile regions and contours, the conditional center-outward quantiles are defined. A new cyclically monotone interpolation, with not necessarily constant weights, is proposed to define them. This method is completely nonparametric and produces interpretable empirical regions/contours which converge in probability to their population counterparts. The presentation will include some real and synthetic examples, showing the adaptation and applicability of the method, in particular its ability to capture the heteroskedasticity and the trend of the data.

Bio: Eustasio del Barrio is Professor of Statistics at the University of Valladolid (Spain). His research focuses on probability and mathematical statistics, with an interest in probabilistic metrics and their statistical applications, in particular metrics related to optimal transport. He is a member of the Executive Board of the Spanish Statistical Society (SEIO) and associate editor of TEST.

Robust and Fair Multisource Learning (Christoph Lampert - Institute of Science and Technology Austria)

Abstract:

In the era of big data, the training data for machine learning models is commonly collected from multiple sources. Some of these might be unreliable (noisy, biased, or even manipulated). Can learning algorithms overcome this and still learn predictors of optimal accuracy and ideally fairness? In my talk, I highlight recent results from our group that establish situations in which this is possible or impossible.

Bio: Christoph Lampert is a tenured professor at the Institute of Science and Technology Austria (ISTA). He heads a research group for Machine Learning and Computer Vision, and is the director of the institute’s ELLIS Unit. Before joining IST Austria in 2010, he received a PhD in mathematics from the University of Bonn in 2003 and held postdoctoral positions at the German Research Center for Artificial Intelligence and the Max-Planck Institute for Biological Cybernetics. His research on computer vision and machine learning has won several international and national awards, including best paper prizes at CVPR, ECCV and DAGM. In 2012 he was awarded an ERC Starting Grant (consolidator phase) by the European Research Council. He is a member of the editorial boards of the International Journal of Computer Vision (IJCV) and the Journal of Machine Learning Research (JMLR), and a former Associate Editor in Chief of the IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI).

Towards Collective Intelligence in Heterogeneous Learning (Krikamol Muandet - CISPA)

Abstract:

Democratization of AI involves training and deploying machine learning models across heterogeneous and potentially massive environments. While a diversity of data can create new possibilities to advance AI systems, it simultaneously poses pressing concerns such as privacy, security, and equity that restrict the extent to which information can be shared across environments. Inspired by social choice theory, I will first present a choice-theoretic perspective of machine learning as a tool to analyze learning algorithms in heterogeneous environments. To understand the fundamental limits, I will then provide a minimum requirement in terms of intuitive and reasonable axioms under which empirical risk minimization (ERM) is the only rational learning algorithm in heterogeneous environments. This impossibility result implies that Collective Intelligence (CI), the ability of algorithms to successfully learn across heterogeneous environments, cannot be achieved without sacrificing at least one of these essential properties. Lastly, I will discuss the implications of this result in critical areas of machine learning such as out-of-distribution generalization, federated learning, algorithmic fairness, and multi-modal learning.

Bio: Krikamol Muandet is a tenure-track faculty member at CISPA Helmholtz Center for Information Security, Saarbrücken, Germany. Before joining CISPA, he was a research group leader in the Empirical Inference Department at the Max Planck Institute for Intelligent Systems (MPI-IS), Tübingen, Germany. He was a lecturer in the Department of Mathematics at Mahidol University, Bangkok, Thailand. He received his Ph.D. in computer science from the University of Tübingen in 2015, working mainly with Prof. Bernhard Schölkopf. He received his master's degree in machine learning from University College London (UCL), United Kingdom, where he worked mostly with Prof. Yee Whye Teh at the Gatsby Computational Neuroscience Unit. He served as a publication chair of AISTATS 2021 and as an area chair for ICLR 2023, AISTATS 2022, NeurIPS 2021, NeurIPS 2020, NeurIPS 2019, and ICML 2019, among others. His research interests include kernel methods, kernel mean embedding of distributions, learning under distributional shifts, domain generalization, counterfactual inference, and how to regulate the deployment of machine learning models.

Stochastic Optimization for Large-Scale Machine Learning: Variance Reduction, Acceleration, and Robustness to Noise (Julien Mairal - Inria Grenoble)

Abstract:

In this talk, we are interested in continuous optimization problems with a particular "large-scale" structure that prevents us from using generic optimization toolboxes or simply vanilla first- or second-order gradient descent methods. In such a context, all of these tools suffer from too high a per-iteration complexity, too slow convergence, or both, which has motivated the machine learning community to develop dedicated algorithms. We will introduce several such techniques and focus in particular on stochastic optimization, which plays a crucial role in applying machine learning techniques to large datasets.

Bio: Julien Mairal is a research scientist at Inria Grenoble, where he leads the Thoth research team. He joined Inria Grenoble in 2012, after a post-doc in the statistics department of UC Berkeley. He received the Ph.D. degree from Ecole Normale Superieure, Cachan. His research interests include machine learning, computer vision, mathematical optimization, and statistical image and signal processing. In 2016, he received a Starting Grant from the European Research Council. He was awarded the Cor Baayen prize in 2013, the IEEE PAMI young researcher award in 2017 and the test-of-time award at ICML 2019.

Nonconvex Min-Max Optimization: Fundamental Limits, Acceleration, and Adaptivity (Niao He - ETH Zurich)

Abstract:

Min-max optimization plays a critical role in emerging machine learning applications from training GANs to robust reinforcement learning, and from adversarial training to fairness. In this talk, we discuss some recent results on min-max optimization algorithms with a special focus on the modern nonconvex regime, including their fundamental limits, acceleration, and adaptivity. We introduce the first accelerated algorithms that achieve near-optimal complexity bounds as well as a family of adaptive algorithms with parameter-free adaptation under various problem settings.

Bio: Niao He is currently an Assistant Professor in the Department of Computer Science at ETH Zurich, where she leads the Optimization and Decision Intelligence (ODI) Group. She is also an ELLIS Scholar and a core faculty member of ETH AI Center, ETH-Max Planck Center of Learning Systems, and ETH Foundations of Data Science. Previously, she was an assistant professor at the University of Illinois at Urbana-Champaign from 2016 to 2020. Before that, she received her Ph.D. degree in Operations Research from Georgia Institute of Technology in 2015. Her research interests are in large-scale optimization, machine learning, and reinforcement learning. She is a recipient of the AISTATS Best Paper Award, NSF CISE Research Initiation Initiative (CRII) Award, NCSA Faculty Fellowship, and Beckman CAS Fellowship. She regularly serves as an area chair for NeurIPS, ICLR, and ICML, among others.

Friday, 28 October

Fast Rates for Noisy Interpolation Require Rethinking the Effects of Inductive Bias (Fanny Yang - ETH Zurich)

Abstract:

Interpolating models have recently gained popularity in the statistical learning community due to common practices in modern machine learning: complex models achieve good generalization performance despite interpolating high-dimensional training data. In this talk, we prove generalization bounds for high-dimensional linear models that interpolate noisy data generated by a sparse ground truth. In particular, we first show that minimum-l1-norm interpolators achieve high-dimensional asymptotic consistency at a logarithmic rate. Further, as opposed to the regularized or noiseless case, for min-lp-norm interpolators with 1

Bio: Fanny Yang is an Assistant Professor of Computer Science at ETH Zurich. She received her Ph.D. in EECS from University of California, Berkeley in 2018 and was a postdoctoral fellow at Stanford University and ETH-ITS in 2019. Her current research interests include methodological and theoretical advances for problems that arise from distribution shift or adversarial robustness requirements, studying the effects of interpolation on the (robust) generalization ability of overparameterized models and interpretability/explainability of neural networks.

Hyperplane Arrangement Classifiers in Overparameterized and Interpolating Settings (Clayton Scott - University of Michigan)

Abstract:

This talk will examine classifiers expressed in terms of arrangements of hyperplanes. The first part will demonstrate that such classifiers can be learned from training data by formulating them as a kind of partially quantized, overparametrized neural network. We show that unlike other neural networks analyzed in the literature, hyperplane arrangement neural networks can be meaningfully analyzed using classical VC theory. The second part of the talk will introduce an ensemble of hyperplane arrangement classifiers that interpolates the training data perfectly yet is also statistically consistent for a large class of data distributions.

Bio: Clay Scott received his PhD in Electrical Engineering from Rice University in 2004, and is currently Professor of Electrical Engineering and Computer Science at the University of Michigan. His research interests include statistical machine learning theory and algorithms, with an emphasis on nonparametric methods for supervised and unsupervised learning. He has also worked on a number of applications including brain imaging, nuclear threat detection, environmental monitoring, and computational biology. In 2010, he received the Career Award from the US National Science Foundation.

E is the New P: the E-value as a Generic Tool for Robust, Anytime Valid Hypothesis Testing and Confidence Intervals (Peter Grunwald - CWI & Leiden University)

Abstract:

How much evidence do the data give us about one hypothesis versus another? The standard way to measure evidence is still the p-value, despite a myriad of problems surrounding it. We present the E-value, a recently popularized notion of evidence which overcomes some of these issues. While E-values lay dormant until 2019, their use has recently exploded - we just had a one-week workshop with attendees from Netflix, Booking (A/B testing), clinical trial design and meta-analysis. E-values are also the basic building blocks of anytime-valid confidence intervals that remain valid under optional stopping and that are crucial for gaining trustworthy uncertainty quantification in bandit settings. In simple cases, the E-value coincides with the Bayes factor, the notion of evidence preferred by Bayesians. But if the null is composite or nonparametric, or an alternative cannot be explicitly formulated, E-values and Bayes factors become distinct and E-processes can be seen as a generalization of nonnegative supermartingales. Unlike the Bayes factor, E-values allow for tests with strict 'classical' Type-I error control under optional continuation and combination of data from different sources. They are also easier to interpret than p-values, having a straightforward interpretation in terms of sequential betting.

Bio: Peter Grünwald heads the machine learning group at CWI in Amsterdam, the Netherlands. He is also full professor of Statistical Learning at the Mathematical Institute of Leiden University. From 2018 to 2022 he was President of the Association for Computational Learning, the organization running COLT, the world’s prime annual conference on machine learning theory, having chaired COLT himself in 2015. He also chaired UAI – another top ML conference – in 2010/2011. Apart from publishing at ML venues like NIPS, COLT and UAI, he regularly contributes to statistics journals such as the Annals of Statistics. He is the author of the book The Minimum Description Length Principle (MIT Press, 2007), which has become the standard reference for the MDL approach to learning. In 2010 he was co-awarded the Van Dantzig prize, the highest Dutch award in statistics and operations research. He has frequently appeared in Dutch national media commenting, e.g., on statistical issues in court cases.

Parameter Identifiability and Structure Learning for Linear Gaussian Graphical Models (Carlos Améndola - Berlin Institute of Technology)

Abstract:

Linear structural equation models are multivariate statistical models that are defined by specifying noisy linear functional relationships among random variables. These relationships can be encoded in a directed graph. In this talk we consider the classical case of additive Gaussian noise terms and study the problem of injectivity of the model parametrization, as well as learning the structure of the directed graph from data. While much work has been done in the acyclic case of Bayesian networks, we also present results that can be obtained in the presence of directed cycles, and illustrate this approach on protein expression datasets.

Bio: Carlos Enrique Améndola Cerón grew up in Mexico City and, fascinated by the interactions between "pure" and "applied" Math, simultaneously pursued undergraduate degrees in both (at Universidad Nacional Autónoma de México and at Instituto Tecnológico Autónomo de México, respectively). He did graduate studies at New York University before moving to Germany to do research in algebraic statistics under the supervision of Bernd Sturmfels. After postdoctoral positions at the Technical University of Munich and the Max Planck Institute for Mathematics in the Sciences in Leipzig, Carlos just started a research group in "Algebraic and Geometric Methods in Data Analysis" at the Berlin Institute of Technology. The topics of interest include algebraic statistics, nonlinear algebra and applied algebraic geometry.
